Setting Up a Development Environment for Apache Gluten + ClickHouse Backend


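Building the Code

Environment

- arch: x86-64 recommended
- OS: Ubuntu 20.04 or 22.04 recommended
- compiler: clang 19
- cmake: 3.20 or later

Because Gluten is built together with ClickHouse, first make sure the system environment has been initialized as described in How to Build ClickHouse on Linux.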
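Checking the requirements above before starting can save a long failed build. The bash sketch below is a helper written for this article, not part of Gluten or ClickHouse; it assumes Ubuntu-style binary names (`clang-19`, `clang++-19`), that `ninja` is needed because the ClickHouse build uses `-G Ninja`, and GNU `sort -V` for version comparison.

```shell
# version_ge A B: succeed when version A >= version B (relies on GNU sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# check_env: hypothetical preflight for the environment listed above.
# Prints what is missing and returns non-zero if a hard requirement fails.
check_env() {
  local rc=0 cm tool
  [ "$(uname -m)" = "x86_64" ] || echo "warning: arch is $(uname -m); x86-64 is recommended"
  for tool in clang-19 clang++-19 ninja; do
    command -v "$tool" >/dev/null 2>&1 || { echo "missing: $tool"; rc=1; }
  done
  # The first line of `cmake --version` looks like "cmake version 3.22.1".
  cm=$(cmake --version 2>/dev/null | head -n1 | awk '{print $3}')
  if [ -z "$cm" ] || ! version_ge "$cm" 3.20; then
    echo "need cmake >= 3.20 (found: ${cm:-none})"
    rc=1
  fi
  return "$rc"
}
```

Running `check_env` once on a fresh machine shows everything that still needs installing in one pass instead of one cmake error at a time.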

Building Gluten

git clone https://github.com/apache/incubator-gluten

cd incubator-gluten

export MAVEN_OPTS="-Xmx32g -XX:ReservedCodeCacheSize=2g"

mvn clean package -Pbackends-clickhouse -Pspark-3.3 -Pceleborn -Piceberg -Dbuild_cpp=OFF -Dcpp_tests=OFF -Dbuild_arrow=OFF -Dbuild_protobuf=ON -Dbuild_jemalloc=ON -Dspark.gluten.sql.columnar.backend.lib=ch -Dspotless-check.skip -DfailIfNoTests=false -DskipTests -Pdelta

After the build finishes, you should see the corresponding jars:

$ cd backends-clickhouse/target

$ ll -rt *.jar

-rw-r--r-- 1 root root 59810700 Apr 3 14:22 backends-clickhouse-1.5.0-SNAPSHOT-tests.jar

-rw-r--r-- 1 root root 2127903 Apr 3 14:22 backends-clickhouse-1.5.0-SNAPSHOT.jar

-rw-r--r-- 1 root root 12132532 Apr 3 14:23 gluten-1.5.0-SNAPSHOT-spark-3.3-jar-with-dependencies.jar

-rw-r--r-- 1 root root 2127903 Apr 3 14:23 backends-clickhouse-1.5.0-SNAPSHOT-3.3.jar

Building ClickHouse

For ClickHouse we use the fork maintained by Kyligence:

git clone https://github.com/Kyligence/ClickHouse

cd ClickHouse

# <branch> must match the branch recorded in clickhouse.version in gluten; it usually looks like rebase_ch/YYYYMMDD

git checkout <branch>

cd contrib

bash update-submodules.sh

cd ../utils

# Symlink Gluten's CH-related C++ code into the CH source tree so the two are built together

ln -sf /path/to/gluten/cpp-ch/local-engine extern-local-engine

# Go back to the CH root directory

cd ..

bash build_gcc.sh
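The `<branch>` placeholder in the checkout step does not have to be copied by hand. Assuming the version file in the gluten checkout is `cpp-ch/clickhouse.version` and stores the branch as a `CH_BRANCH=...` line (both the path and the key are assumptions here; check your checkout), a small bash helper can read it:

```shell
# ch_branch FILE: print the value of the first CH_BRANCH=... line in FILE.
# The KEY=VALUE layout is an assumption about gluten's clickhouse.version.
ch_branch() {
  local branch
  branch=$(sed -n 's/^CH_BRANCH=//p' "$1" | head -n1)
  if [ -z "$branch" ]; then
    echo "no CH_BRANCH entry in $1" >&2
    return 1
  fi
  printf '%s\n' "$branch"
}

# Usage (paths are placeholders):
# branch=$(ch_branch /path/to/gluten/cpp-ch/clickhouse.version)
# git -C ClickHouse checkout "$branch"
```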

build_gcc.sh contains the following:

mkdir -p build_gcc

cd build_gcc

# release only

cmake -G Ninja -DCMAKE_EXPORT_COMPILE_COMMANDS=YES "-DCMAKE_C_COMPILER=$(command -v clang-19)" "-DCMAKE_CXX_COMPILER=$(command -v clang++-19)" -DLLD_PATH="$(command -v ld.lld-19)" -DCMAKE_BUILD_TYPE=RelWithDebInfo -DENABLE_PROTOBUF=ON -DENABLE_TESTS=ON -DENABLE_JEMALLOC=ON -DENABLE_MULTITARGET_CODE=ON -DENABLE_EXTERN_LOCAL_ENGINE=ON -DENABLE_ODBC=OFF -DENABLE_CAPNP=OFF -DENABLE_GRPC=OFF -DENABLE_RUST=OFF -DENABLE_H3=OFF -DENABLE_AMQPCPP=OFF -DENABLE_CASSANDRA=OFF -DENABLE_KAFKA=ON -DENABLE_NATS=OFF -DENABLE_LIBPQXX=OFF -DENABLE_NURAFT=OFF -DENABLE_DATASKETCHES=OFF -DENABLE_SQLITE=OFF -DENABLE_S2_GEOMETRY=OFF -DENABLE_ANNOY=OFF -DENABLE_ULID=OFF -DENABLE_MYSQL=OFF -DENABLE_BCRYPT=OFF -DENABLE_LDAP=OFF -DENABLE_MSGPACK=OFF -DUSE_REPLXX=OFF -DENABLE_CLICKHOUSE_ALL=OFF -DENABLE_GWP_ASAN=OFF -DCOMPILER_FLAGS='-fvisibility=hidden -fvisibility-inlines-hidden' -DENABLE_THINLTO=OFF -DENABLE_AWS_S3=OFF -DENABLE_AZURE_BLOB_STORAGE=OFF -DENABLE_HIVE=1 -DENABLE_BENCHMARKS=ON -DENABLE_AVRO=0 -DENABLE_SSL=1 -DENABLE_SSH=0 ..

ninja libch

After the build finishes, you should see the generated shared library:

cd build_gcc/utils/extern-local-engine

$ ll -rt libch.so

-rwxr-xr-x 1 root root 2337932552 May 15 16:50 libch.so

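Development Environment Setup

ClickHouse

ClickHouse is a pure C++ project. VS Code + Remote-SSH + clangd is the recommended setup; the project directory is /path/to/ClickHouse.

Gluten

Gluten's code is split by language into two parts, covered separately below.

The first part is Scala/Java, with the project directory at /path/to/gluten, developed in IntelliJ IDEA in remote development mode.

Note: by default, the Maven profile options in Gluten do not take effect for the CH backend in the IDE, because the top-level defaults in /path/to/gluten/pom.xml point at Spark 3.4. Modify the file as in the following patch, which switches the defaults to Spark 3.3 and removes the other Spark profiles: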

$ cat pom.patch

diff --git a/pom.xml b/pom.xml

index 03fe1f49e..54dba856c 100644

--- a/pom.xml

+++ b/pom.xml

@@ -69,13 +69,13 @@

<scala.binary.version>2.12</scala.binary.version>

<scala.version>2.12.15</scala.version>

<spark.major.version>3</spark.major.version>

- <sparkbundle.version>3.4</sparkbundle.version>

- <spark.version>3.4.4</spark.version>

- <sparkshim.artifactId>spark-sql-columnar-shims-spark34</sparkshim.artifactId>

+ <sparkbundle.version>3.3</sparkbundle.version>

+ <spark.version>3.3.1</spark.version>

+ <sparkshim.artifactId>spark-sql-columnar-shims-spark33</sparkshim.artifactId>

<iceberg.version>1.5.0</iceberg.version>

<delta.package.name>delta-core</delta.package.name>

- <delta.version>2.4.0</delta.version>

- <delta.binary.version>24</delta.binary.version>

+ <delta.version>2.3.0</delta.version>

+ <delta.binary.version>23</delta.binary.version>

<celeborn.version>0.5.4</celeborn.version>

<uniffle.version>0.9.2</uniffle.version>

<arrow.version>15.0.0</arrow.version>

@@ -301,20 +301,6 @@

<caffeine.version>3.1.8</caffeine.version>

</properties>

</profile>

- <profile>

- <id>spark-3.2</id>

- <properties>

- <sparkbundle.version>3.2</sparkbundle.version>

- <sparkshim.artifactId>spark-sql-columnar-shims-spark32</sparkshim.artifactId>

- <spark.version>3.2.2</spark.version>

- <iceberg.version>1.3.1</iceberg.version>

- <delta.package.name>delta-core</delta.package.name>

- <delta.version>2.0.1</delta.version>

- <delta.binary.version>20</delta.binary.version>

- <antlr4.version>4.8</antlr4.version>

- <hudi.version>0.15.0</hudi.version>

- </properties>

- </profile>

<profile>

<id>spark-3.3</id>

<properties>

@@ -329,50 +315,6 @@

<hudi.version>0.15.0</hudi.version>

</properties>

</profile>

- <profile>

- <id>spark-3.4</id>

- <properties>

- <sparkbundle.version>3.4</sparkbundle.version>

- <sparkshim.artifactId>spark-sql-columnar-shims-spark34</sparkshim.artifactId>

- <spark.version>3.4.4</spark.version>

- <iceberg.version>1.7.1</iceberg.version>

- <delta.package.name>delta-core</delta.package.name>

- <delta.version>2.4.0</delta.version>

- <delta.binary.version>24</delta.binary.version>

- <antlr4.version>4.9.3</antlr4.version>

- <hudi.version>0.15.0</hudi.version>

- </properties>

- </profile>

- <profile>

- <id>spark-3.5</id>

- <properties>

- <sparkbundle.version>3.5</sparkbundle.version>

- <sparkshim.artifactId>spark-sql-columnar-shims-spark35</sparkshim.artifactId>

- <spark.version>3.5.2</spark.version>

- <iceberg.version>1.5.0</iceberg.version>

- <delta.package.name>delta-spark</delta.package.name>

- <delta.version>3.2.0</delta.version>

- <delta.binary.version>32</delta.binary.version>

- <hudi.version>0.15.0</hudi.version>

- <fasterxml.version>2.15.1</fasterxml.version>

- <hadoop.version>3.3.4</hadoop.version>

- <antlr4.version>4.9.3</antlr4.version>

- </properties>

- <dependencies>

- <dependency>

- <groupId>org.slf4j</groupId>

- <artifactId>slf4j-api</artifactId>

- <version>${slf4j.version}</version>

- <scope>provided</scope>

- </dependency>

- <dependency>

- <groupId>org.apache.logging.log4j</groupId>

- <artifactId>log4j-slf4j2-impl</artifactId>

- <version>${log4j.version}</version>

- <scope>provided</scope>

- </dependency>

- </dependencies>

- </profile>

<profile>

<id>hadoop-2.7.4</id>

<properties>

Then click Reload in IDEA's Maven tool window.
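To confirm the edit took effect before reloading, one option is to print the first occurrence of each property in pom.xml. This is a rough grep-based bash sketch, not a Maven feature; it assumes the top-level `<properties>` block appears before the profiles, as the hunk line numbers in the patch suggest, so the first match is the default the IDE falls back to.

```shell
# pom_default PROP [POM]: print the value of the first <PROP>...</PROP> in POM.
# Heuristic: the first match is assumed to be the top-level default.
pom_default() {
  grep -m1 -o "<$1>[^<]*" "${2:-pom.xml}" | sed 's/^<[^>]*>//'
}

# Usage, from /path/to/gluten:
# for p in sparkbundle.version spark.version sparkshim.artifactId delta.version; do
#   echo "$p = $(pom_default "$p")"
# done
```

After the patch, the loop should report 3.3, 3.3.1, spark-sql-columnar-shims-spark33 and 2.3.0.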

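The second part is the CH-backend C++ code, with the project directory at /path/to/gluten/cpp-ch/local-engine, also developed with VS Code + Remote-SSH + clangd.

Note: this project shares one compile_commands.json with the ClickHouse project, so create a symlink to it from /path/to/gluten/cpp-ch/local-engine:

ln -sf /path/to/ClickHouse/build_gcc/compile_commands.json ./compile_commands.json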
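If the symlink points at a file that does not exist, clangd has no compilation database and falls back to generic flags, which typically shows up as a flood of bogus include errors. A minimal bash sketch that checks before linking (the function name is made up for this article; it only wraps `ln`):

```shell
# link_compile_commands CH_ROOT [DEST_DIR]: symlink CH's compile_commands.json
# into DEST_DIR (default: current directory); fail if it was not generated yet.
link_compile_commands() {
  local src="$1/build_gcc/compile_commands.json"
  local dst="${2:-.}/compile_commands.json"
  if [ ! -f "$src" ]; then
    echo "not found: $src (run the CH cmake step with -DCMAKE_EXPORT_COMPILE_COMMANDS=YES first)" >&2
    return 1
  fi
  ln -sfn "$src" "$dst"
}

# Usage:
# cd /path/to/gluten/cpp-ch/local-engine
# link_compile_commands /path/to/ClickHouse
```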

Before committing, format all changed Java/Scala files in one pass:

$ cat tidy.sh

prefix=$(pwd)

files=$(git status . | grep -e "modified:" -e "new file:" | awk -F':' '{print $2}' | awk -v prefix="${prefix}" '{print prefix"/"$1}' | grep -e '\.java$' -e '\.scala$' | tr "\n" ",")

mvn spotless:apply -DspotlessFiles="${files}" -Pbackends-clickhouse -Pspark-3.3 -Pceleborn -Pspark-ut
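Parsing the human-readable `git status` output in tidy.sh is fragile: its wording can change between versions and is localized. A bash sketch based on `git status --porcelain`, whose format is intended for scripts, could look like the following. The function name is hypothetical, and it assumes ordinary file names (git quotes names containing spaces or unusual characters, which this sketch does not unquote).

```shell
# changed_sources ROOT: read `git status --porcelain` lines on stdin and print
# a comma-separated list of changed .java/.scala files as absolute paths.
# Porcelain paths are relative to the repository root, hence ROOT.
changed_sources() {
  local root="$1" line status path out=""
  while IFS= read -r line; do
    status="${line:0:2}"                  # two-letter XY status code
    path="${line:3}"                      # path starts after "XY "
    [[ "$status" == *D* ]] && continue    # nothing to format in deleted files
    [[ "$path" == *" -> "* ]] && path="${path##* -> }"  # rename: keep new name
    case "$path" in
      *.java|*.scala) out+="${root}/${path}," ;;
    esac
  done
  printf '%s\n' "${out%,}"                # drop the trailing comma
}

# Usage, from anywhere inside the gluten checkout:
# root=$(git rev-parse --show-toplevel)
# files=$(git status --porcelain | changed_sources "$root")
# mvn spotless:apply -DspotlessFiles="$files" -Pbackends-clickhouse -Pspark-3.3 -Pceleborn -Pspark-ut
```

Untracked files (status `??`) are included on purpose, since new source files also need formatting before they are committed.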

After submitting a PR, you sometimes need to download the CI log manually to track down a failure:

$ cat get_log.sh

curl "https://gluten:passwd@opencicd.kyligence.com/job/gluten/job/gluten-ci/$1/consoleText" > "$1.log"

$ cat get_armlog.sh

curl "https://gluten:passwd@opencicd.kyligence.com/job/gluten/job/gluten-ci-arm/$1/consoleText" > "$1.log"
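The two scripts differ only in the job name. A hedged bash sketch that merges them and reads the credentials from environment variables instead of hard-coding them in the URL; `GLUTEN_CI_USER` and `GLUTEN_CI_PASS` are names invented for this example, and `curl -u` sends them as HTTP basic auth.

```shell
# ci_log_url BUILD [arm]: console-log URL for a build of gluten-ci (default)
# or gluten-ci-arm.
ci_log_url() {
  local build="$1" job="gluten-ci"
  [[ "${2:-}" == arm ]] && job="gluten-ci-arm"
  printf 'https://opencicd.kyligence.com/job/gluten/job/%s/%s/consoleText\n' "$job" "$build"
}

# get_log BUILD [arm]: download the console log to BUILD.log.
# GLUTEN_CI_USER / GLUTEN_CI_PASS are hypothetical variable names.
get_log() {
  local build="${1:?usage: get_log BUILD [arm]}"
  curl -fsS -u "${GLUTEN_CI_USER}:${GLUTEN_CI_PASS}" \
    -o "${build}.log" "$(ci_log_url "$build" "${2:-}")"
}

# Usage: get_log 1234        # x86 job
#        get_log 1234 arm    # ARM job
```

With `-f`, curl exits with an error on an HTTP failure instead of saving the error page as the log.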

Testing

Gluten

Manual unit tests

Manual unit tests are launched from the Maven command line; to run a different test suite, change the class name in the -Dsuites value.

LD_PRELOAD=/path/to/ClickHouse/build_gcc/utils/extern-local-engine/libch.so mvn test -Pspark-3.3 -Dtest=None -Dsuites="org.apache.gluten.execution.hive.GlutenClickHouseHiveTableSuite*" -DfailIfNoTests=false -Pspark-ut -Pbackends-clickhouse -Pceleborn -Pdelta -Piceberg -Dbuild_cpp=OFF -Dcpp_tests=OFF -Dbuild_arrow=OFF -Dbuild_protobuf=ON -Dbuild_jemalloc=ON -Dspark.gluten.sql.columnar.backend.lib=ch -Dcheckstyle.skip=true -Dscalastyle.skip -Dspotless-check.skip -DargLine="-Dspark.test.home=/path/to/test/home -Dspark.gluten.sql.columnar.libpath=/path/to/ClickHouse/build_gcc/utils/extern-local-engine/libch.so" -Dtpcds.data.path=/data1
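Since only the suite name changes between runs, the command can be wrapped in a bash function. This is a sketch under stated assumptions: `CH_LIB`, `TEST_HOME`, `TPCDS_DATA` and `MVN` are variable names made up for this example, and the Maven flags are copied from the command above.

```shell
# run_suite SUITE: run one ScalaTest suite pattern against libch.so.
# CH_LIB, TEST_HOME, TPCDS_DATA and MVN are hypothetical override variables.
run_suite() {
  local suite="$1"
  local lib="${CH_LIB:-/path/to/ClickHouse/build_gcc/utils/extern-local-engine/libch.so}"
  local home="${TEST_HOME:-/path/to/test/home}"
  # LD_PRELOAD applies to this one command; child processes such as forked
  # test JVMs normally inherit it, while the rest of the shell is unaffected.
  LD_PRELOAD="$lib" "${MVN:-mvn}" test -Pspark-3.3 -Pspark-ut -Pbackends-clickhouse \
    -Pceleborn -Pdelta -Piceberg -Dtest=None -Dsuites="$suite" -DfailIfNoTests=false \
    -Dbuild_cpp=OFF -Dcpp_tests=OFF -Dbuild_arrow=OFF -Dbuild_protobuf=ON \
    -Dbuild_jemalloc=ON -Dspark.gluten.sql.columnar.backend.lib=ch \
    -Dcheckstyle.skip=true -Dscalastyle.skip -Dspotless-check.skip \
    -DargLine="-Dspark.test.home=$home -Dspark.gluten.sql.columnar.libpath=$lib" \
    -Dtpcds.data.path="${TPCDS_DATA:-/data1}"
}

# Usage:
# run_suite "org.apache.gluten.execution.hive.GlutenClickHouseHiveTableSuite*"
```

Setting `MVN=echo` prints the full command instead of running it, which is handy for checking the flags.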

IDEA单测

首先在IDEA的gluten工程中的maven project窗口中,添加gluten-ut/spark3.3和celeborn/clickhouse (如果还有其他未添加的folder, 也可按需添加)

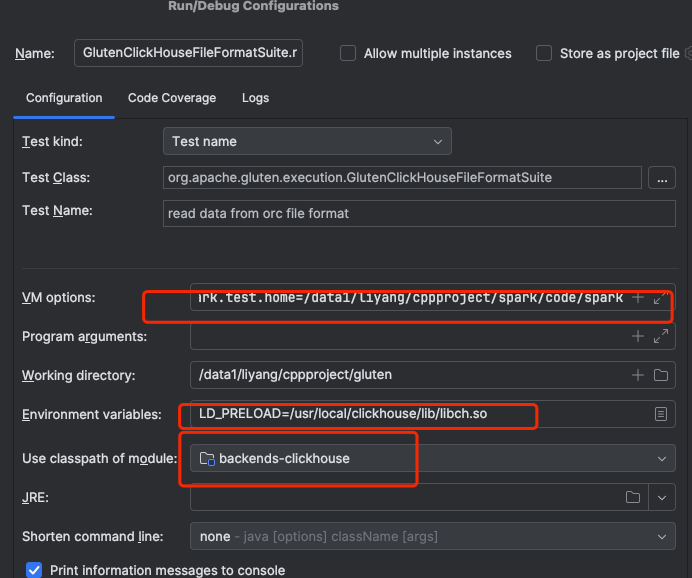

对于org.apache.gluten.*下的test suite, 修改对应的"Run/Debug Configurations”

其中

- VM options为

-Dspark.gluten.sql.columnar.backend.lib=ch -Dspark.gluten.sql.columnar.libpath=/path/to/ClickHouse/build_gcc/utils/extern-local-engine/libch.so -Dspark.test.home=/path/to/test/home -Dtpcds.data.path=/data1 - Environment variables:

LD_PRELOAD=/path/to/clickhouse/build_gcc/utils/extern-local-engine/libch.so - Use class of module要选

backends-clickhouse

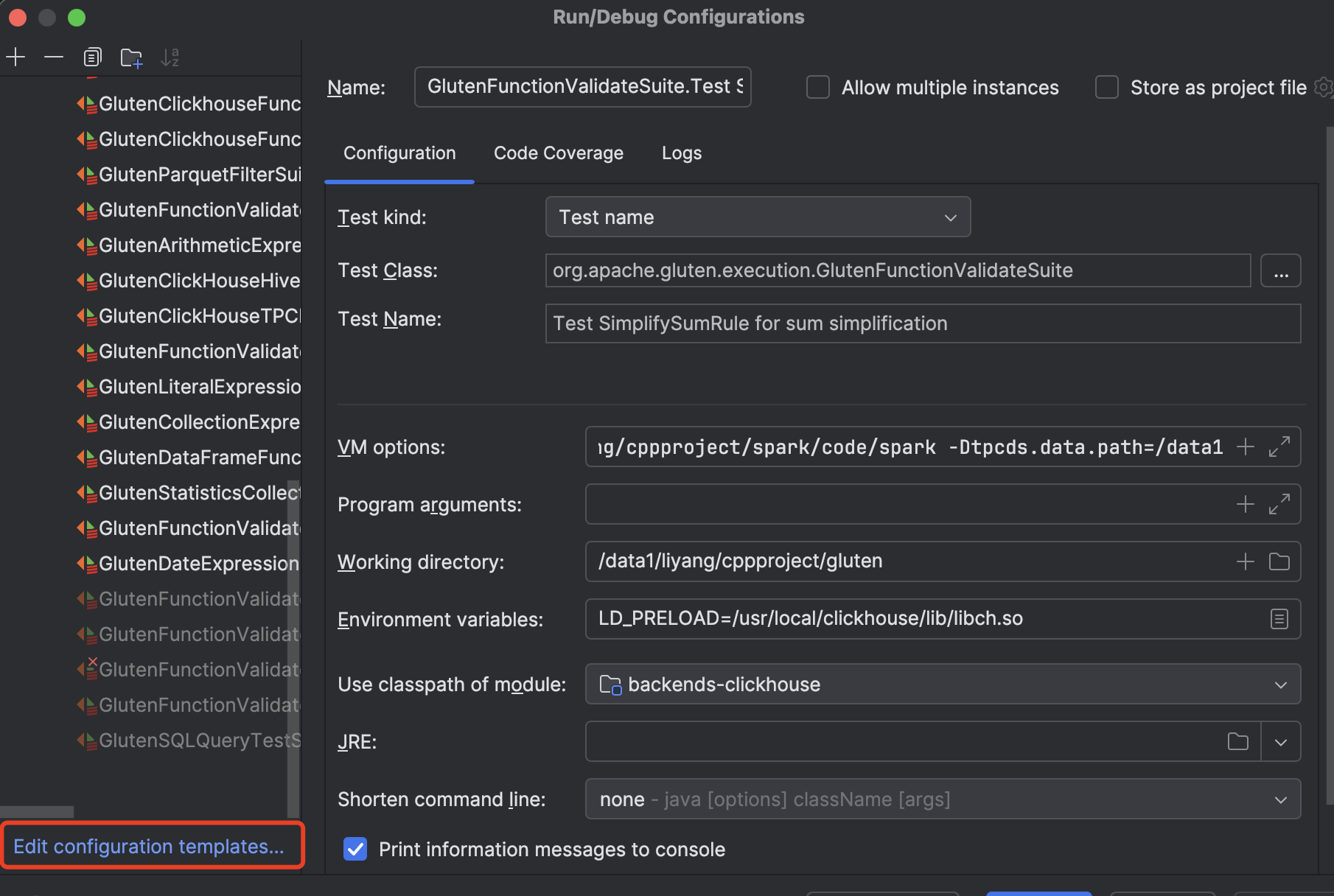

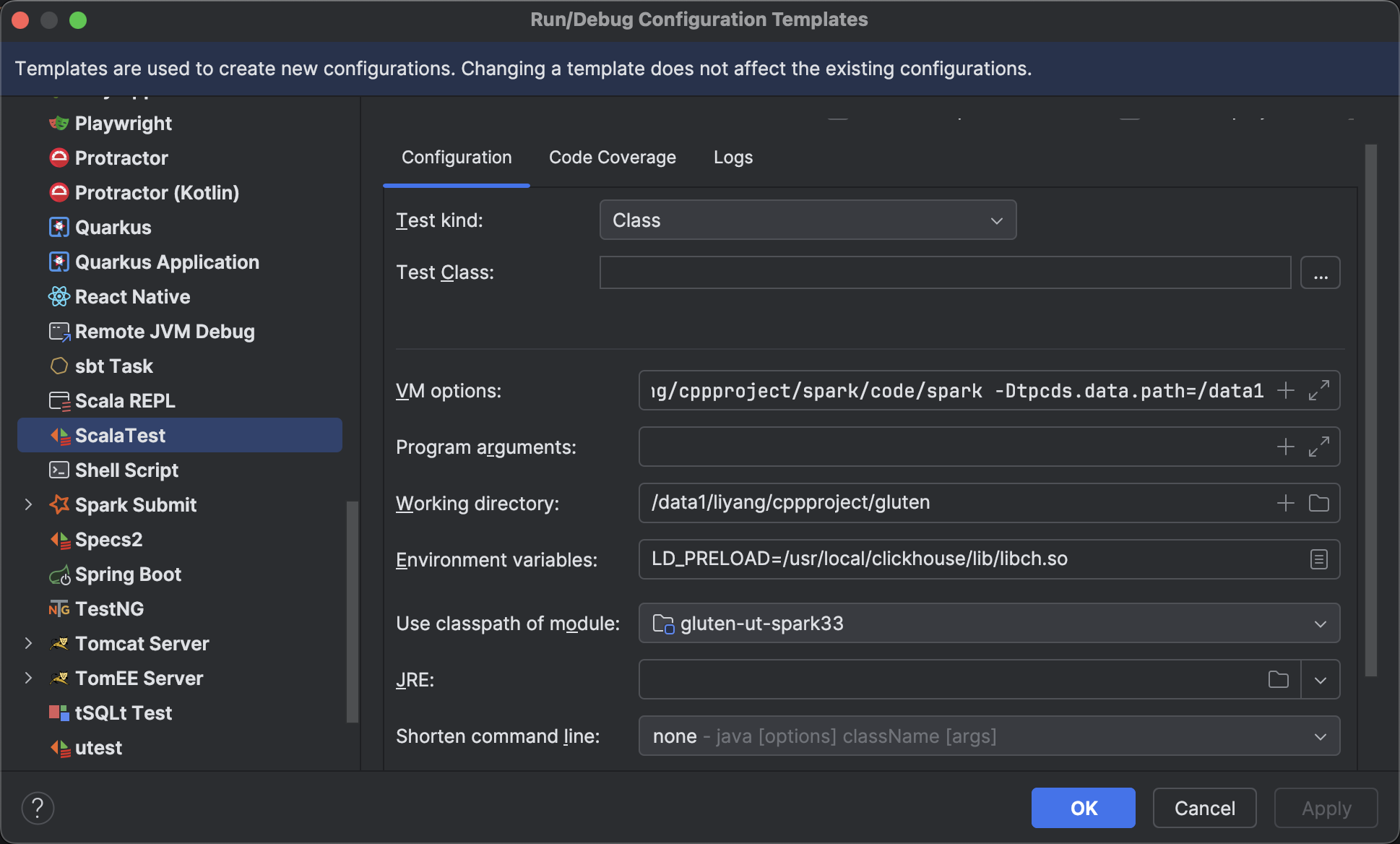

For test suites under org.apache.spark.*, first edit the corresponding configuration template as follows:

- VM options: same as above
- Environment variables: same as above
- Use classpath of module: select gluten-ut-spark33

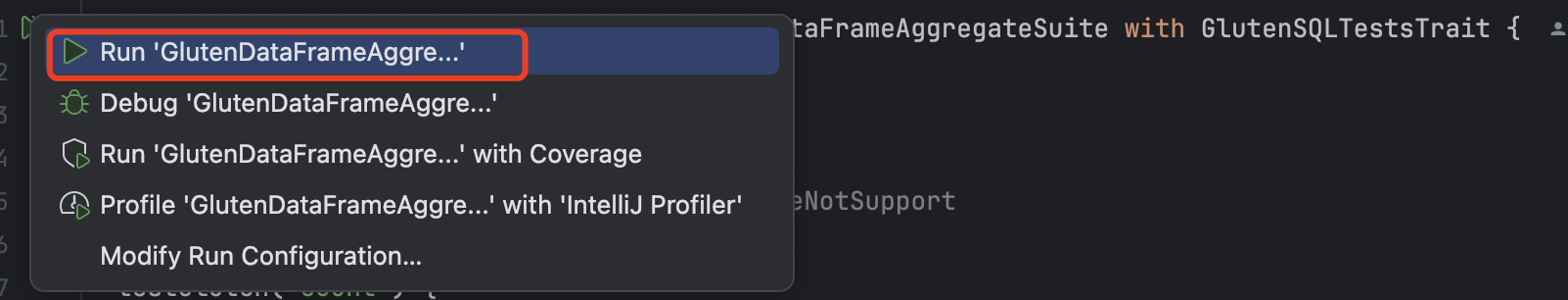

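Once configured, you can run a whole test suite or a single test case.

Note: the build phase of a UT run sometimes fails. If that happens, run mvn clean first, rebuild manually with the command from Building Gluten, and then rerun the UT.

CH

CH has both unit tests and benchmarks.

CH unit tests

First, in build_gcc.sh from Building ClickHouse, replace ninja libch with ninja unit_tests_local_engine.

Then run the unit tests:

./build_gcc/utils/extern-local-engine/tests/unit_tests_local_engine --gtest_filter="xxx"

CH benchmarks

First, in build_gcc.sh from Building ClickHouse, replace ninja libch with ninja benchmark_local_engine.

Then run the benchmarks:

./build_gcc/utils/extern-local-engine/tests/benchmark_local_engine --benchmark_filter="xxx"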
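Editing build_gcc.sh for every target is easy to forget to undo. One alternative, sketched here as a hypothetical bash helper rather than anything shipped with ClickHouse, takes the ninja target as an argument and only runs cmake when build_gcc has not been configured yet. Only a few of the cmake options are repeated; in practice paste the full option list from build_gcc.sh. The `CMAKE` and `NINJA` overrides are our own addition for dry runs.

```shell
# build_ch [TARGET]: build one of the targets named above (default: libch).
# The body runs in a subshell, so the `cd` does not leak into the caller.
build_ch() (
  target="${1:-libch}"
  case "$target" in
    libch|unit_tests_local_engine|benchmark_local_engine) ;;
    *) echo "unexpected target: $target" >&2; exit 1 ;;
  esac
  mkdir -p build_gcc && cd build_gcc || exit 1
  # Configure once; the generated build.ninja re-runs cmake itself when
  # CMakeLists.txt files change.
  if [ ! -f build.ninja ]; then
    # Abbreviated: use the full option list from build_gcc.sh here.
    "${CMAKE:-cmake}" -G Ninja -DCMAKE_EXPORT_COMPILE_COMMANDS=YES \
      -DCMAKE_BUILD_TYPE=RelWithDebInfo -DENABLE_TESTS=ON \
      -DENABLE_BENCHMARKS=ON -DENABLE_EXTERN_LOCAL_ENGINE=ON .. || exit 1
  fi
  "${NINJA:-ninja}" "$target"
)

# Usage, from the ClickHouse root:
# build_ch unit_tests_local_engine
# build_ch benchmark_local_engine
```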

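Running locally

Follow the official documentation for this part.

Finally, here are the table-creation SQL statements for the TPC-H dataset: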

CREATE TEMPORARY VIEW lineitem

USING org.apache.spark.sql.parquet

OPTIONS (

path "/data1/liyang/cppproject/gluten/gluten-core/src/test/resources/tpch-data/lineitem"

) ;

CREATE TEMPORARY VIEW orders

USING org.apache.spark.sql.parquet

OPTIONS (

path "/data1/liyang/cppproject/gluten/gluten-core/src/test/resources/tpch-data/orders"

) ;

CREATE TEMPORARY VIEW part

USING org.apache.spark.sql.parquet

OPTIONS (

path "/data1/liyang/cppproject/gluten/gluten-core/src/test/resources/tpch-data/part"

) ;

CREATE TEMPORARY VIEW customer

USING org.apache.spark.sql.parquet

OPTIONS (

path "/data1/liyang/cppproject/gluten/gluten-core/src/test/resources/tpch-data/customer"

);

CREATE TEMPORARY VIEW supplier

USING org.apache.spark.sql.parquet

OPTIONS (

path "/data1/liyang/cppproject/gluten/gluten-core/src/test/resources/tpch-data/supplier"

) ;

CREATE TEMPORARY VIEW partsupp

USING org.apache.spark.sql.parquet

OPTIONS (

path "/data1/liyang/cppproject/gluten/gluten-core/src/test/resources/tpch-data/partsupp"

) ;

CREATE TEMPORARY VIEW nation

USING parquet

OPTIONS (

path "/data1/liyang/cppproject/gluten/gluten-core/src/test/resources/tpch-data/nation"

) ;

CREATE TEMPORARY VIEW region

USING org.apache.spark.sql.parquet

OPTIONS (

path "/data1/liyang/cppproject/gluten/gluten-core/src/test/resources/tpch-data/region"

) ;
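Since the eight statements differ only in the table name, they can also be generated from a single data directory. A bash sketch, where the directory argument is whatever holds the per-table Parquet folders on your machine (the function name is made up for this article):

```shell
# tpch_views DATA_DIR: print CREATE TEMPORARY VIEW statements for the eight
# TPC-H tables, each reading Parquet files from DATA_DIR/<table>.
tpch_views() {
  local dir="${1%/}" t
  for t in lineitem orders part customer supplier partsupp nation region; do
    printf 'CREATE TEMPORARY VIEW %s\nUSING parquet\nOPTIONS (\n  path "%s/%s"\n);\n\n' \
      "$t" "$dir" "$t"
  done
}

# Usage: tpch_views /path/to/tpch-data > tpch_views.sql
```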

Author: 后端侠

Last updated: 2025-05-21